FAT and Fake AI

When political correctness trips itself up

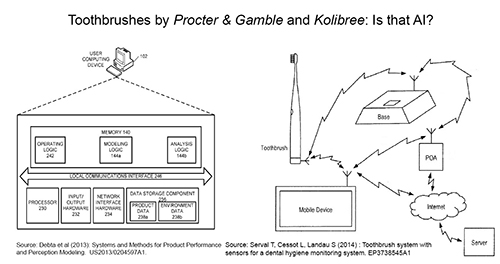

In 2017 James Vincent covered the Consumer Electronics Show (CES) in Las Vegas for the tech journal The Verge (The Verge, January 4, 2017). There he came across an oral hygiene product advertised as the “1st toothbrush with artificial intelligence”. On closer enquiry the journalist was reluctant to write anything about it. In the end he wrote the story (“This smart toothbrush claims to have its very own ‘embedded AI’”) and later added a comment (“this toothbrush doesn’t have artificial intelligence”).

Vincent is not alone. In recent years there has been a growing malaise among professionals in many disciplines about artificial intelligence (AI), its often exaggerated claims, and the people hyping it up. “Fake AI” is a timely book which promises to look behind the razzmatazz, describe how AI works and how it goes astray, and offer readers insights to gauge civic responsibility. It turns out that political intentions are not enough.

Editor Frederike Kaltheuner is a well-known advocate of embedding citizens’ rights in technology. Her latest book (2021) convenes 26 authors who join the editor to “intervene”, in sixteen chapters and one annex, against fake AI and to pillory pseudoscience, i.e., embodiments of AI that are useless, inaccurate, or even fraudulent. The editor has brought together writers from the worlds of science, arts, and industry. Professionals from universities, employees of Google or Visa, single entrepreneurs, and consultants contribute expertise ranging broadly from computer and natural sciences (7 authors) to social sciences or humanities (13), arts (5), and media (2). Contributors are based in six countries: the United States (15 authors), Europe (DE: 2; IE: 1; NL: 1; UK: 6), and Nigeria (2). This time, perspectives from other continents are left out, for example from Asia, where Shinzo Abe advanced a policy framework for responsible AI in Japan in 2017, or China’s efforts (SCPD 2017; Schmid/Xiong 2021). Needless to say, all authors subscribe to FAT in AI, i.e., they advocate implementing the principles of Fairness, Accountability, and Transparency in its use. But what does “AI” mean? Some contributors treat it as equivalent to machine learning, others as a subset of it. For some it is a scientific method, for others a job description, an industrial application, or a specific functionality such as face recognition. Some authors treat AI as input (e.g., an algorithm), others as outcome (e.g., “discrimination”). The authors juxtapose a colourful string of concepts, such as “race”, “ethnicity”, or “black feminisms”, that are barely explained or harmonised among them. These cohere through a shared mission: explaining AI. But what is it beyond fake, or pseudoscience?

Before starting this book, readers are well advised to make sure that they have a general idea of artificial intelligence. WIPO (2019) offers a useful description: AI encompasses techniques such as machine learning, functional applications such as image recognition, and application fields such as fintech or agriculture.

How to recognise “Fake”

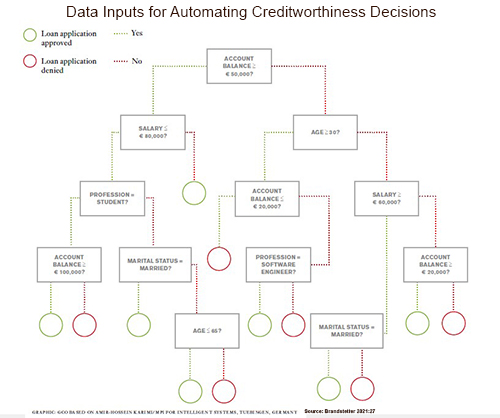

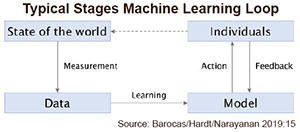

At the outset the editor interviews Arvind Narayanan, Associate Professor of Computer Science at Princeton, who identifies fake AI and distinguishes it from other types. Fake AI, which Narayanan calls “snake oil”, consists of promises of salvation reminiscent of the cargo cult 1) formula (Cox 2011; Feynman 1974; Trapp 2020). Fake is set apart from “pseudoscience” and “misguided research”. Pseudoscience is identified as flirting with science while building AI on “fundamentally shaky assumptions”. By contrast, “misguided research”, according to Narayanan, is science which betrays its own ideals of serving truth and society. At this point in the interview the reader would like to learn what is not misguided, in other words what sound scientific AI looks like. Alas, the interviewer is not interested in a positive description, but prompts the scientist to move on to another topic. Readers are assumed to know what Arvind Narayanan and colleagues (Barocas et al 2019; Kilbertus 2020) have contributed to defining “good” scientific AI. For readers less familiar with the scientific authors who propose “fairness” as the key principle setting AI apart from fake, pseudoscience, or misguided research: fairness is much harder to implement in machine learning 2) than setting up common semantic filters (e.g., avoiding “race”) or refining traditional assumptions (e.g., who is creditworthy and who is not). Kilbertus (2020) proposes an algorithm which regularly reviews its own assumptions, acts against them from time to time, and learns from the results. This idea is hard to realise in practice. But let us return to the contents of the book, because its authors show little interest in improving AI.
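The general idea of a decision rule that reviews its own assumptions by occasionally acting against them can be illustrated with a minimal sketch. This is not Kilbertus’s algorithm: it is a hypothetical epsilon-greedy lending rule, and the class `ExploringLender`, the score threshold, the exploration rate `epsilon`, and the review rule are all assumptions made for illustration. The rule follows a credit model most of the time, occasionally grants a loan the model would refuse so that outcomes are also observed for such applicants, and lowers its threshold if those applicants repay well.

```python
import random
from typing import Optional


class ExploringLender:
    """Hypothetical lending rule (illustration only, not Kilbertus 2020).

    Follows a score threshold most of the time, but with probability
    `epsilon` approves an applicant the model would refuse, so that
    repayment outcomes are also observed for normally rejected applicants.
    """

    def __init__(self, threshold: float, epsilon: float, seed: int = 0) -> None:
        self.threshold = threshold
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        # Observed repayments (1 = repaid, 0 = defaulted) by decision type.
        self.outcomes = {"model": [], "explore": []}

    def decide(self, score: float) -> str:
        """Return 'model', 'explore' (approved against the model) or 'reject'."""
        if score >= self.threshold:
            return "model"
        if self.rng.random() < self.epsilon:
            return "explore"
        return "reject"

    def record(self, decision: str, repaid: bool) -> None:
        """Store the outcome of an approved loan."""
        self.outcomes[decision].append(1 if repaid else 0)

    def exploration_repay_rate(self) -> Optional[float]:
        """Repayment rate among loans granted against the model, if any."""
        xs = self.outcomes["explore"]
        return sum(xs) / len(xs) if xs else None

    def review(self, target: float = 0.9, step: float = 0.05) -> None:
        """Revise the assumption: lower the threshold if applicants the
        model would have refused nevertheless repay at the target rate."""
        rate = self.exploration_repay_rate()
        if rate is not None and rate >= target:
            self.threshold = max(0.0, self.threshold - step)


if __name__ == "__main__":
    lender = ExploringLender(threshold=0.6, epsilon=1.0)  # always explore, for the demo
    decision = lender.decide(0.4)
    lender.record(decision, repaid=True)
    lender.review(target=0.9, step=0.1)
    print(decision, round(lender.threshold, 2))  # prints: explore 0.5
```

The exploration step is what makes learning possible at all: a lender that only ever follows its model never sees how refused applicants would have behaved. The price is that some loans are deliberately granted against the model’s advice, which hints at why the idea is hard to realise in practice.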

Lost in Bits and Pieces

AI is applied in many fields today, even in the arts (Vincent 2019). This book deals with advertising, agriculture, financial services, and criminology. Self-driving vehicles (B2Bioworld 2022) and digital health (Huss 2018), which are highly important in AI development (WIPO 2019), are barely mentioned. Arguably, these fields do not readily lend themselves to a critique of “discrimination”.

Amironesei and colleagues, Raji, and Jansen and Cath-Speth focus on components of machine learning: benchmark datasets, annotation heuristics, and algorithms. Amironesei et al take as their example “ImageNet”, a database (not introduced further to readers) linked to another (proprietary) English-language lexical database (trademark: WordNet) in which words are associated with synonyms. Whoever seeks will find: the authors are quick to find “depreciative” words which they suspect of guiding the practical categorisation of images. The authors propose that training datasets should be subjected to an “interpretive socio-ethical analysis” before they are used as benchmarks. This is an interesting idea, because it counters the predominant approach in machine learning, which treats “training data” as objective and uncontaminated by subjective meaning. Recently this view has been contested by civil-society advocates who demand the deletion of “hate speech” in social media. But the inflated solution Amironesei et al favour does not appear to go beyond what media operators already do, namely creating human oversight (e.g., an ethics committee), selecting politically correct terms, and excluding undesired meaning with semantic filters. A reference to Alexander Reben’s experiment in the same book would have helped to provide a deeper sense of “interpretive techniques” (see below) or of human communication. Semantics is but one level; meaning also depends crucially on grammar and pragmatics, as every linguist knows. Last but not least, nowhere do these authors consider that any “socio-ethical” analysis depends on the cultural framework in which it is applied. Nowhere is this more visible than in Tulsi Parida’s and Aparna Ashok’s account of जुगाड़ in the book. This Hindi term, transcribed as “jugaad”, has a richer meaning than its English translation “techno-solution”. According to the authors, it denotes a low-cost, quick tech fix and is an essential piece of political rhetoric which serves to secure acceptance while glossing over serious flaws of AI implementation in India’s agriculture (see also: Gurumurthy/Bharthur 2019). In their article, Amironesei and colleagues ignore Parida and Ashok’s contribution and neglect to relate their observations to the place of the described components within the entire machine learning process (Barocas et al 2019; Denton et al 2021).

Certainly algorithms are discussed in this book. You know what an algorithm is, don’t you? Deborah Raji contributes her experience of designing and engineering algorithms at Google. Like Frederike Kaltheuner, she has served as a Fellow for Tech & Society with the Mozilla Foundation. Raji focuses on the division of work between AI designers and others in an organisation. In a vivid account, the author describes how organisational irresponsibility is nurtured within a hierarchy under pressure to bring a product with an algorithm as its key component to market as quickly as possible. It is also worth reading her other work (e.g., Raji/Buolamwini 2019) for details and a deeper understanding of her conclusions in Fake AI.

Fieke Jansen and Corinne Cath-Speth turn to auditing algorithms. Performance assessment and conformity with standards are at the heart of civic demands for external control of social media oligopolies. Public algorithm registers in Amsterdam, Helsinki, and New York show that it is possible to implement FAT principles and arrange for public accountability. These registers are not perfect. However, by focusing on proving discrimination, shortcomings, and gaps in them, Jansen and Cath-Speth pass up the chance to detail how these initiatives could be improved and possibly inform private registers. They also ignore Raji’s observations on how AI engineers within organisations can create faits accomplis for those downstream. Instead, the authors draw conclusions about a “lack of critical engagement by AI proponents”, “governance-by-database”, or systems “advancing punitive politics that primarily target already vulnerable populations”. This may be true, or it may be hyped up to demonstrate critical thinking. The way it is presented across eleven pages does not allow readers to evaluate the accusation for themselves or to view AI from a detached, yet positive perspective. Without complementary reading, a legitimate overall critique ends in lament. Coherence between individual chapters is loose, and contributions get lost in fragmented bits and pieces of AI. Uses, functional applications, and techniques are juxtaposed without any attempt to introduce conceptual clarity or to help readers understand the individual authors’ choices.

Going Beyond the “Schrems-Moment”

In 2011 the Austrian Facebook user Max Schrems claimed his privacy rights and demanded the information stored about him since he joined as a member in 2008. Facebook Ireland complied and inadvertently sent him more than 1,200 pages of data, which showed that Facebook ignored existing laws of the EU and of its Member States. Mr. Schrems was aghast and told Forbes: “I’m just a normal guy who’s been on Facebook for three years. Imagine this in 10 years: every demonstration I’ve been to, my political thoughts, intimate conversations, discussion of illnesses.” In 2018 Frederike Kaltheuner experienced her own “Schrems-Moment” after the data trader Quantcast had to disclose private data about her. In Fake AI Kaltheuner retells her 2018 experience and expresses her indignation about “eerily accurate inferences” drawn from everyday behaviour and linked to questionable automated categorisations of her person. In 2021 she goes beyond that moment and reflects on identity ascription (“Who am I as data?”). This is surprising in a publication which intends to be an “intervention”. What followed from her indignation in 2018?

When she first wrote about it (Kaltheuner 2018), she worked for Privacy International. This UK NGO subsequently (2018) filed complaints against Quantcast and others for breaches of European privacy law 3). Three years later Ms. Kaltheuner is remarkably silent about the outcome of the complaint lodged with the British, Irish, and French data protection authorities. What were the reactions or arguments of the data watchdogs handling the complaint? She prefers to pursue ontological questions of “identity” and happily proclaims that “it is impossible to detect someone’s gender, ethnicity, or sexual orientation using AI techniques”. What makes her so sure that AI (for example, AI guiding biometrics) is unable to identify individuals by these social categories 4)? Moreover, what is special about data traders like Quantcast besides grabbing private data and assembling fragments into an alleged profile of a specific citizen?

Readers find answers not in this book, but in documents collected by Max Schrems and publications by the Austrian NOYB (an acronym for None of Your Business). They have been fighting for citizens’ privacy and litigating against Facebook and other data brokers for more than a decade 5). Two years after Privacy International, NOYB (2020) took a step similar to the British NGO’s: it filed a complaint with the French data watchdog CNIL accusing Google LLC of breaching the European GDPR 6). In February CNIL announced its decision: Google had been violating “the rules on international data transfer to the U.S.”. A deeper description of Quantcast would have been appropriate to explore the accountability principle in the case of data transfers to other jurisdictions. Quantcast is not just another British, Irish, or French data trader subject to EU law. It is a U.S. company which follows the example of its global American role models and sails in their slipstream. Kaltheuner suggests that we “rethink identity”, but why does she not question the evasion of regulations by transferring private data to another jurisdiction, in other words juggling data between different states? Several critics point out the societal risks of stateless data, where the data broker decides what counts as legitimate use, who has access rights, and how much is disclosed.

There are some exceptions where Fake AI reaches beyond indignation and cherished beliefs of political correctness. The chapter by the Nigerian authors Borokini and Oloyede could have been an eye-opener for other authors in the book.

Another dimension of “discrimination” – Access to finance in Nigeria

Many authors of Fake AI invoke “discrimination” as the motivation for their intervention. They treat discrimination per se as “bad” and therefore as something to be battled. This stance is poorly thought through. An allegation of “discrimination” through AI may simply be based on a faulty understanding of a mathematical method. The classic example from statistics is Simpson’s Paradox: a trend that holds within every subgroup can reverse once the subgroups are pooled. Alternatively, an accusation of discrimination may stem from a lack of transparency in a decision based on an algorithm. Thus, in 2019 a New York couple suspected Apple and Goldman Sachs of unfairly discriminating against one spouse with the Apple Card, a mobile credit card service. This led to media scrutiny and a public investigation 7). Two years later the authorities concluded that the bank had indeed not been transparent, but that it had not violated federal laws on equal access to credit. Last but not least, “discrimination” may be desired, for example when it favours people with disabilities or those with rare diseases (diagnosed by face recognition software; see Gelbman 2018). Positive discrimination can benefit underserved populations. This was the Nigerian government’s motivation for enacting its financial inclusion policy in 2012.
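A minimal worked example of Simpson’s Paradox shows how pooled figures can suggest discrimination that reverses once the data are broken down. The two applicant groups X and Y, the two loan products, and all counts below are invented for illustration; they are not taken from the Apple Card case or from the book.

```python
from fractions import Fraction

# Hypothetical approval counts (approved, applications) for two applicant
# groups and two loan products; the numbers are invented for illustration.
DATA = {
    "X": {"small_loan": (18, 20), "mortgage": (24, 80)},
    "Y": {"small_loan": (64, 80), "mortgage": (4, 20)},
}


def per_product_rates(data):
    """Approval rate of each group within each loan product."""
    return {
        group: {product: Fraction(a, n) for product, (a, n) in products.items()}
        for group, products in data.items()
    }


def pooled_rates(data):
    """Approval rate of each group with all products pooled together."""
    pooled = {}
    for group, products in data.items():
        approved = sum(a for a, _ in products.values())
        applied = sum(n for _, n in products.values())
        pooled[group] = Fraction(approved, applied)
    return pooled


if __name__ == "__main__":
    by_product = per_product_rates(DATA)
    pooled = pooled_rates(DATA)
    for product in ("small_loan", "mortgage"):
        x, y = by_product["X"][product], by_product["Y"][product]
        print(f"{product}: X {float(x):.0%} vs Y {float(y):.0%}")
    print(f"pooled: X {float(pooled['X']):.0%} vs Y {float(pooled['Y']):.0%}")
```

Group X is approved more often for each product (90% vs 80%, 30% vs 20%) but less often overall (42% vs 68%), simply because most of its applications go to the mortgage product, which has a low approval rate for everyone. Judged on the pooled figures alone, the lender would appear to discriminate against X.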

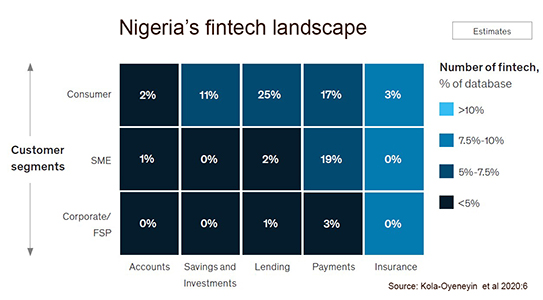

Borokini and Oloyede, like other authors (Adaramola et al 2020; Kola-Oyeneyin et al 2020), describe the landscape in Africa’s most populous country, which is producing a boom in fintechs and providers of digital financial services with limited respect for citizens’ privacy or for rights that authors from Europe or the United States take for granted. An estimated forty to sixty million Nigerians have no access to financial services from traditional banks, which is thought to contribute to growing inequality, persistent poverty, and threats to the food supply. According to the International Monetary Fund (February 9, 2022), a majority of these people work in agriculture, which accounts for 26% of real GDP and employs 45% of the country’s labour force, predominantly people with low income and little education. This sector badly needs to improve food yields. In Nigeria there is a “general absence of secure land titles”, which in turn limits farmers’ access to banks. They need such access to borrow money for fertilizer and seeds or, for example, to set up storage facilities that reduce the significant losses from rotting crops.

Borokini and Oloyede point out that, on paper, Nigeria has many laws to protect citizens’ privacy, but these are rarely enforced. For example, Nigerian fintechs regularly force smartphone owners to give unlimited consent to sharing all data on their device, including access by third parties. It is not unusual for a Nigerian citizen to be rated a high-risk borrower because he or she has no smartphone or refuses unlimited access to its data. This is hardly warranted given that only 2% of transactions are losses due to fraud (eFinA 2020), but it is tolerated by the authorities, even though it subverts their own policies. A common explanation is rampant corruption. Transparency International’s Corruption Perceptions Index 2021 gives Nigeria a score of 24 (0 = high corruption; 100 = absence of corruption). It should be noted that this happens in a state which is not an autocracy but has a political system modelled on the American one.

The Nigerian examples recall descriptions by other authors in the book of privacy transgressions, sloppy benchmark datasets, ignorance of technology’s consequences outside the United States 8) at lower hierarchy levels of global platform brokers, and collusion by policymakers. What can be done if local or national governance fails? How is it possible to ensure or restore citizens’ trust in AI if political safeguards fail at home?

Cowhey and Aronson (2017) have dealt with such questions arising from digital technologies. First, they argue for involving the private sector more closely. Then, they ask how governance may be induced from outside if it fails in organisations or even in states 9). These authors recommend involving foreign private companies (e.g., digital platform operators) in solutions and, more generally, leveraging foreign trade to bring about governance at local or national levels. Germany’s supply chain law may be a template. The recent proclamation of the African Continental Free Trade Area and the engagement of Western automotive industries are other examples (B2Bioworld 2021). Admittedly, cross-border thinking is not a strength of the authors of Fake AI.

Artists approach AI

An unusual but innovative approach is to include the perspective of artists in a debate which is mostly focused on technology, science, and politics. Back in 1998 Margaret Boden (1998) of the University of Sussex described creativity in AI art as producing previously impossible ideas by combining familiar ones and exploring conceptual spaces. Frederike Kaltheuner encouraged Carlos Romo-Melgar, John Philip Sage, and Roxy Zeiher, as well as Alexander Reben and Adam Harvey, to take the plunge.

Romo-Melgar, Sage, and Zeiher are three graphic designers who have gained a reputation for illustrating books and visualizing art works. It is hard to understand why they are relegated to a minor role in the annex of the print and e-book editions and are not introduced with biographies. They contributed the layout. Romo-Melgar, Sage, and Zeiher aimed at creating the “(first) book designed by an AI” (see example). Using a Generative Adversarial Network (GAN), which we learn is software “that-doesn’t-know how to design”, the designers evidently succeeded in visualizing the book’s title and its core message: “what is seemingly autonomous, is in reality disguising the work of humans”. Stains and streaks on the pages and overlaid greyish imitations of plasterwork form the background of the title pages. The GAN evidently reverses the conventional function of layout: the content succumbs to the design. The individual contributions do not read comfortably, with ragged margins and text bleeding across the pages. All occurrences of the letters “AI”, “ai”, or “Ai”, even within words, are replaced by random renderings, thereby carrying the overall political intent. However, the graphic designers occasionally compromised on the AI output by using the “Wizard of Oz technique”. The type is larger than usual and set in bold. Some passages, usually poetic interjections or literature references, are set in reverse against black spaces. In the end it is not the designers but the publisher and editor who are accountable for the burdensome reading.

In his chapter, the artist Alexander Reben, who graduated in robotics and applied mathematics, also experiments with AI software. He programmed two chatbots to interact with each other. Chatbots are a hot topic in customer care, and a rising number of companies use them. Reben finds that chatbot communication is essentially soliloquy, contrary to promises that chatbots match human conversation. He also programmed an automated fortune teller and wrapped the resulting messages in “fortune cookies”. Some of the machine speech and messages are pure nonsense. Reben observes that human recipients nevertheless make sense out of nonsense. His advice to readers is a little naïve at a time when consumers are hassled by automated calls. In “take on the ceiling” he cautions recipients to ask themselves: “Am I projecting human capabilities onto it which may be false?” In the 1950s and 1960s Harold Garfinkel and other ethnomethodologists described the documentary method of interpretation as a basic interpretive procedure of human communication. Rather than breaking off, people use this procedure to keep communicating with each other on the assumption of a shared world. This is also the Achilles heel of automated speech and machine fortune telling: if you suspect that your counterpart is a chatbot or a machine, do not question yourself; just talk nonsense and you will soon get rid of it or be connected to a human operator.

Adam Harvey, who graduated in telecommunications, is the third artist. Unlike the designer team and Reben, Harvey explores a recurring topic in art: “What is a face?”. He chooses to approach it not from art but from technology, focusing on one subspecialty: (biometric) face recognition. Here he enquires into the difference between human and machine vision. Concentrating on face detection, he is one of the few authors in this book who even considers the existence of binding standards for computer vision outputs (see Grother et al 2019). In discussing the minimum number of pixels needed to reproduce a human face, Harvey does not clearly distinguish face identification from the consecutive process steps of recognition (face alignment, feature extraction, feature matching). He thereby misses the importance of modelling a face (usually from several photos), of matching a particular photo to the model, and of retrieving a particular “face” from a database, which is substantially more than just a collection of “thousands of faces”, because these may be real or simulated for the purpose at hand. Sticking to image resolution, he calls for including other features of face recognition as well, for example spectral bandwidth, features which are already part of industry solutions (Wu 2016; Siemens Corporate Research at Princeton).

Nevertheless, Harvey points to a major weakness of conventional machine vision: it ignores social context. This is a hot topic in automated face recognition (Thoma et al 2017), but why does he not discuss facial expressions, which indicate context while still allowing a particular individual to be identified? A recent exhibition of Renaissance portraits at Amsterdam’s Rijksmuseum offers inspiration and insight from the perspective of art for pursuing those questions. Sara van Dijk and Matthias Ubl (2021) highlight that painted Renaissance portraits regularly go beyond lifelike physical appearance and display societal norms which in turn locate the particular person in his or her context 10).

If such comparisons between machine-processed faces and art are too demanding, Apple’s iPhone face recognition app could have offered simpler examples for an enquiry into a face. After a photo is shot with the smartphone camera, the app detects faces and analyses their characteristics. Then the software runs through the photo archive uploaded to Apple’s cloud, looking for similar features and matching the retrieved photos against the query photo. The last step is verification, i.e., the decision whether similarity amounts to physical identity. The result is presented for the iPhone owner’s judgement: “Same or different Person?”, with the options “Same”, “Different”, and “Not Sure”. Of course, you (and AI-trained software) decode much more from the presentation than just physical faces or the person’s name as an identity tag. There is a child with its mother, both are young, of Asian ethnicity, not at home but in a restaurant outside the United States, and so on. Beyond pixels and mirroring “a face”, AI software does far more than biometric identification. It is from what is in a face, and from its relation to other items in the photo and to the observer, that AI uncovers health states, weighs purchasing power, or guesses at social relationships. As the iPhone app shows, human input is indispensable at some point in this process.
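The matching and verification steps just described can be sketched in a few lines. This is not Apple’s implementation. It assumes that faces have already been detected and converted into numeric feature vectors (“embeddings”); the function names, the toy three-dimensional vectors, and the thresholds 0.8 and 0.5 are hypothetical, and cosine similarity is used as one common similarity measure.

```python
import math
from typing import Dict, List, Tuple

Embedding = List[float]


def cosine_similarity(a: Embedding, b: Embedding) -> float:
    """Cosine of the angle between two feature vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def best_match(query: Embedding, gallery: Dict[str, Embedding]) -> Tuple[str, float]:
    """Matching: return the gallery entry most similar to the query face."""
    return max(
        ((name, cosine_similarity(query, emb)) for name, emb in gallery.items()),
        key=lambda item: item[1],
    )


def verify(similarity: float, same_at: float = 0.8, different_below: float = 0.5) -> str:
    """Verification: map a similarity score to 'same', 'different' or
    'not sure'; the middle band is left to a human to decide."""
    if similarity >= same_at:
        return "same"
    if similarity < different_below:
        return "different"
    return "not sure"


if __name__ == "__main__":
    # Toy 3-dimensional "embeddings" (hypothetical); real systems use
    # learned vectors with many more dimensions.
    gallery = {"person_a": [0.9, 0.1, 0.0], "person_b": [0.0, 1.0, 0.2]}
    query = [0.6, 0.6, 0.1]
    name, score = best_match(query, gallery)
    print(name, round(score, 2), verify(score))
```

With these toy vectors the best match (person_a, similarity of roughly 0.78) falls into the middle band and is returned as “not sure”. Leaving such cases to the owner rather than forcing a decision is one way the human input mentioned above enters the loop.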

Epilogue

In April of last year the OECD (Galindo et al 2021) published an overview of national AI strategies and of its own principles. It is telling that nowhere in their political book do the authors of Fake AI refer to such an extraordinary cross-border stocktaking, nor do they even attempt to discuss progress in international governance. While the authors call for FAT and responsible AI, it is very clear that decisions must be taken in politics, not science, because social uses of AI are based on stakeholder negotiations between citizens, governments, and industry. Examples from Nigeria, India, or inside Silicon Valley show that science is limited in its ability to counter misguided AI. Attempting to write a “poetic guide” with interspersed dada phrases instead of delivering a clear political statement blurs genres and obscures the authors’ personal credo. At best it inhibits impartial and rational decisions between “AI or black feminisms”.

A prerequisite for participation is some overall understanding of AI, its workings, and its place in negotiations. Cowhey and Aronson (2017) show that there are templates for international governance and that principles are emerging for extending and regulating FAT (B2Bioworld 2016). Taking note of these approaches requires a change in perspective away from the self-affirmation of political correctness or proxy aspirations. With their approach of arbitrarily chosen bits and pieces, the authors leave readers from outside computer or cognitive science almost entirely to their own devices. AI remains as elusive as the morphed “AI” letters spread throughout the book. James Vincent’s account of covering a hyped toothbrush is an innocuous but telling example of how beliefs and political correctness trip themselves up.

Back in 2017 the journalist was under pressure to cover CES 2017 in Las Vegas in near real time. His earlier articles show a good nose for inflated PR presentations. However, more than three years later he merely retells his experience and does not bother to follow up on his own observation. In the original article (The Verge, January 4, 2017; emphasis in original) Vincent concluded: “It’s not technically lying to describe these gadgets as ‘artificial intelligence,’ it’s hardly truthful either.”

What is this truth, or more precisely this peculiar tension between technology, compliance with the law, and what is presented as AI? In the case of the toothbrush, why is it not a technological lie? How does the presentation not violate the law? And whose truth is it? A closer look reveals that Kolibree SAS (Serval et al 2014) described its invention in several patents between 2014 and 2016. This may be the part of “law” Vincent alludes to. The patent of 2016 is the most explicit with regard to “AI”. Here one may ask whether, or why, the toothbrush is not technically a lie. Was the background to Kolibree’s appearance at CES 2017 that Procter & Gamble was hot on Kolibree’s heels at the time? Early in the last decade (Debta et al 2013) the Ohio company had worked on perception modelling of hygiene products, including a toothbrush, but had not managed to bring its AI-inspired product to market by 2017. If so, this presentation could be taken as AI hype. Jotting down an old story without any follow-up does not live up to readers’ expectations. It may not be AI, but it is up to the critic to substantiate his initial verdict. Otherwise he behaves no better than those who fake and twist AI in Narayanan’s typology: Just do it. If challenged, forget what you said. It was yesterday. You can always apologise for being wrong.

Wolf G Kroner

Footnote/s

1) Cargo cult is an umbrella term for religious movements preaching the fulfilment of indigenous peoples through the arrival of Western goods by the ships or planes of the white man (Turner HW in Hinnells 1984). Thus, building docks or airstrips and the associated worship rituals were thought to bring about the promised new order. The phenomenon was originally observed under colonization in Irian Jaya, Papua New Guinea, and Melanesia, and cargo cult followers have maintained one central characteristic of believers to this day, i.e., imitating the conduct of the white man without a deeper understanding of global trade or value chains. Although most Melanesians following the cult today understand that cargo is not handed to the white man by God, anthropologists (Cox 2011; Otto 2009) show that they nevertheless cling to unthinking imitation (e.g., falling for Ponzi schemes) in the belief that they will get rich fast.

2) It is important to keep in mind that, from a technical perspective, AI is a technological subset and not identical to machine learning. Several authors do not distinguish between machine learning based on current statistical methods and artificial intelligence tools that require other procedures.

3) That year, UK-based Privacy International submitted a complaint to the UK, Irish and French authorities, accusing the ad data traders Quantcast, Criteo, and Tapad of breaching the GDPR.

4) Biometric AI software performs remarkably well in identifying single persons by these categories. Guided by AI, Israeli state organisations as well as private businesses make extensive use of software that can identify persons and sort them into general social categories. Thus “Arab” ethnicity is easily recognizable by combining personal photos with the names and places of birth in Israeli passports. See also: Stern YZ (2014): Is "Israeli" a Nationality? Jewish Telegraphic Agency, March 3; B2Bioworld (2021): Human Voices Re-valued. September.

5) Max Schrems discovered shocking violations of his privacy as well as breaches of contractual relations when he requested that Facebook disclose the information it stored about him as a “member”. Facebook inadvertently handed over all the data it had stored, including data he had asked to be deleted. Online, he documented the course of events from his “moment” in 2011 to his litigation against Facebook in 2014. This is also documented by NOYB.

At some point in the legal disputes, Facebook's lawyers questioned whether Mr. Schrems was entitled to invoke privacy at all, arguing that he should not be regarded as a consumer to whom GDPR applies but as someone acting in a business role, because he had collected money to fund the litigation.

6) GDPR refers to the European Union's General Data Protection Regulation, which became applicable in the Member States on May 25, 2018 (EU 2018) and is currently debated as an exemplar for regulation in other jurisdictions such as the United States.

7) Recently, Vibbert et al (2022) provided an overview of evolving privacy laws in the United States (several states), France, Turkey, China/Hong Kong, and Québec. One observation was that legislators outside the EU tend to adopt provisions of the GDPR.

8) In 2019, a report in the New York Times about alleged violations of the US Equal Credit Opportunity Act by Apple and Goldman Sachs prompted the New York State Department of Financial Services to investigate whether the Apple Card service was biased by gender. In March 2021, the watchdog's report refuted the allegations on the basis of a statistical analysis of 400,000 Apple Card applicants and the bank's explanation of its decision in the case at issue. Several criteria besides sex were examined, which together demonstrated that the bank's decisions were not based on gender discrimination. The bank had not violated any law and had applied its credit terms in line with fair credit scoring, but it had to admit that its decisions lacked transparency for customers, which led to policy changes in the wake of media scrutiny in 2019. Although the case was closed, the investigation pointed to historical discrimination that may be perpetuated in current credit scoring. This happens, for instance, when a borrower pays off a loan: repayment is regularly not mentioned in a credit report, in contrast to information on unpaid loans. The report warned that including alternative data in rating creditworthiness, such as monthly utility payments or web browsing history, could “enhance or tarnish credit history”.

9) In a recent report by EFInA, the Nigerian organisation responsible for implementing and monitoring financial inclusion, it was admitted that 38 million citizens are still excluded, for example from digital payments, even after ten years of running the program.

10) The exhibition not only allows analyses of long-gone faces preserved by painters, but also provides insights into the faces, poses, and expressions of contemporary persons. Van Dijk and Ubl (2021:80) take as an example a photo of Владимир Путин (Vladimir Putin) on horseback which displays “a man firmly in control of the reins of power and of his own destiny”. Reflecting further, they conclude (ibid. 104): “recognition of the rider as Vladimir Putin gives the scene a different slant altogether. It is a question of interpretation. Every reading of an image, be it a photograph, painting, print or sculpture, is coloured by what we know about the sitter and the maker, by tradition, but also by our knowledge and interests”.
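Footnote 8 notes that the regulator's statistical analysis took several criteria besides sex into account. A minimal Python sketch, assuming invented toy data, illustrates why this matters: a raw gap in approval rates between groups can vanish once applicants are compared within the same band of a credit-relevant criterion. This is a simple stratified comparison, not the method the New York State Department of Financial Services actually used; the records, the "high"/"low" credit bands and the function name approval_rates are hypothetical.

```python
from collections import defaultdict

# Hypothetical toy records (gender, credit_band, approved). These are invented
# for illustration only and are NOT data from the NYDFS investigation.
APPLICATIONS = [
    ("F", "high", True), ("F", "high", True),
    ("F", "low", True), ("F", "low", True), ("F", "low", False), ("F", "low", False),
    ("M", "high", True), ("M", "high", True), ("M", "high", True), ("M", "high", True),
    ("M", "low", True), ("M", "low", False),
]


def approval_rates(records, key):
    """Return the share of approved applications for each group produced by key(record)."""
    tally = defaultdict(lambda: [0, 0])  # group -> [approved, total]
    for record in records:
        group = key(record)
        tally[group][0] += int(record[2])
        tally[group][1] += 1
    return {group: approved / total for group, (approved, total) in tally.items()}


if __name__ == "__main__":
    # Raw comparison by gender alone.
    raw = approval_rates(APPLICATIONS, key=lambda r: r[0])
    # Comparison within the same (hypothetical) credit band.
    by_band = approval_rates(APPLICATIONS, key=lambda r: (r[0], r[1]))
    print("raw:", raw)
    print("within bands:", by_band)
```

With these toy numbers the raw approval rates differ (4 of 6 versus 5 of 6), yet within each band they are identical (all high-band applicants approved, half of low-band applicants approved), because the two groups are distributed differently across the bands. A real audit would control for many more criteria at once.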

Reference/s

Adaramola V, Borokini F, Omayi M, Ojedokun O, Tojola Y, Bolakale M, Faya M, Salami A, Chindah RA, Kolawole O, Sarumi A, Oloyede R, Odeshina N, Anonymous (2020): Digital Lending. Inside the pervasive Practice of LendTechs in Nigeria. February: TechHive Advisory Ltd.

B2Bioworld (2016): Good Practice for Robotics and Artificial Intelligence Applications. October.

B2Bioworld (2021): German Automotive Industry: Pulling Strings Together in Africa. December.

B2Bioworld (2022): Hello Taxi! Do you understand me? January.

Barocas S, Hardt M, Narayanan A (2019): Fairness and Machine Learning. Limitations and Opportunities.

Boden MA (1998): Creativity and artificial intelligence. Artificial Intelligence, August: 347-356.

Brandstetter T (2021): Not without a reason! Max-Planck-Research, September: 25-30.

Cowhey PF, Aronson JD (2017): Digital DNA – Disruption and challenges for global governance. New York: Oxford University Press.

Cox JCN (2011): Deception and disillusionment: fast money schemes in Papua New Guinea. PhD thesis, School of Social and Political Science, The University of Melbourne.

Debta K, Nobis PF, Stassen B, Engelmohr R, Wilhelm J, Doll AF, Claire-Zimmet KL, von Koppenfels R, Kressmann F, Wolf M (2013): Systems and Methods for Product Performance and Perception Modeling. US2013/0204597A1, August 8: Procter & Gamble.

Denton E, Hanna A, Amironesei R, Smart A, Ervin HN (2021): On the genealogy of machine learning datasets: A critical history of ImageNet. Big Data & Society, July: 1-14.

European Commission EU (2018): Data Protection. Rules for the protection of personal data inside and outside the EU. Accessed January 2019.

Feynman RP (1974): Cargo Cult Science. Engineering and Science. June: 10-13.

Galindo L, Perset K, Sheeka F (2021): An overview of national AI strategies and policies. Going Digital Toolkit Note, No. 14. April 8: OECD.

Gelbman D (2018): Crowdsourcing Actionable Health Diagnoses. B2Bioworld, August.

Grother P, Ngan M, Hanaoka K (2018): Ongoing Face Recognition Vendor Test (FRVT) Part 2: Identification. Gaithersburg MD, November: National Institute of Standards and Technology.

Gurumurthy A, Bharthur D (2019): Artificial Intelligence in Indian Agriculture. New Delhi, November: Friedrich-Ebert-Stiftung.

Huss R (2018): AI enabled digital pathology: Correlate anything with everything? B2Bioworld, October.

Kaltheuner F (2017): Towards additional guidance on profiling and automated decision-making in the GDPR.

Kaltheuner F (2018): I asked an online tracking company for all of my data and here's what I found. November 7: Privacy International.

Kaltheuner F ed (2021): Fake AI. Manchester, December 14.

Kilbertus N (2020): Beyond traditional assumptions in fair machine learning. PhD Thesis October, University of Cambridge.

Kola-Oyeneyin E, Kuyoro M, Olanrewaju T (2020): Harnessing Nigeria’s fintech potential. London/Lagos, September 23: McKinsey.

NOYB (2020): Plainte au titre de l’Article 77(1) du RGPD – Complaint under Article 77(1) GDPR. Directed against Google LLC before the Commission Nationale de l'Informatique et des Libertés (CNIL). May.

Raji ID, Buolamwini J (2019): Actionable auditing: Investigating the Impact of Publicly Naming Biased Performance Results of Commercial AI Products. Proceedings of the 2019 AAAI/ACM Conference on AI, Ethics, and Society.

Schmid RD, Xiong X (2021): Bioscience Trends in China’s New Five-Year Plan: Personalized and Telemedicine, Ecological Transformation, Brain Research and AI are Hot Topics. CheManager International, December 4.

Serval T, Cessot L, Landau S (2014): Toothbrush system with sensors for a dental hygiene monitoring system. EP3738545A1. Neuilly-sur-Seine, April 4: Kolibree SAS.

State Council on Printing and Distributing SCPD (2017): 国务院关于印发新一代人工智能发展规划的通知 (Notice of the State Council on Printing and Distributing the New Generation Artificial Intelligence Development Plan). July 8. An English translation is available.

Thoma S, Rettinger A, Both F (2017): Towards Holistic Concept Representations: Embedding Relational Knowledge, Visual Attributes, and Distributional Word Semantics. Karlsruhe Institute of Technology. Institute of Applied Informatics and Formal Description Methods. October 21.

Trapp M (2020): Good or Unusable Artificial Intelligence for Autonomous Driving. B2Bioworld, June.

Van Dijk S, Ubl M (2021): Remember Me. Renaissance Portraits. Amsterdam, October: Rijksmuseum.

Vibbert J, Koseki H, Raylesberg JT, Vermosen C (2022): Global Privacy Law Updates in 2022. February 9: Arnold & Porter.

Vincent J (2019): A never-ending stream of AI art goes up for auction. The Verge, March 5.

World Intellectual Property Organization WIPO (2019): Technology Trends 2019 – Artificial Intelligence. Geneva, January 30.

Wu Z (2016): Human Re-Identification. Springer.

Source: Wolf G Kroner

This article by B2Bioworld® is free of charge. Please note: this article, including pictures etc., is copyrighted. If you would like to republish it in part or in full, you can apply for a licence.
